this post was submitted on 02 Mar 2025

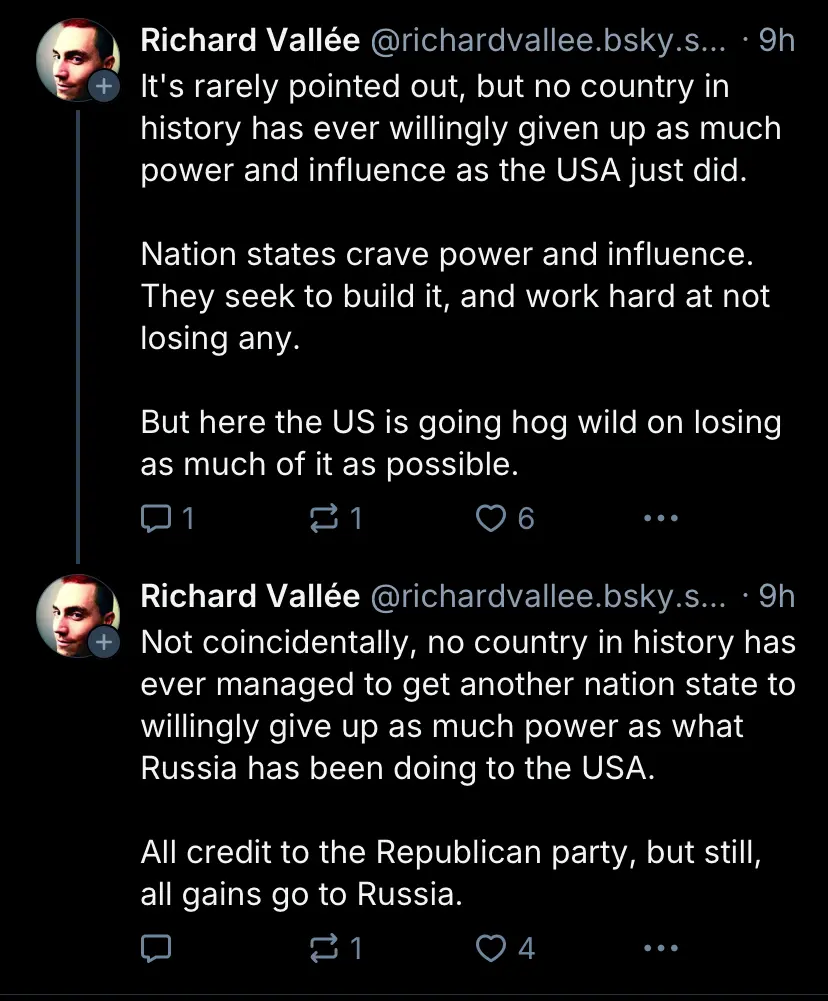

"Russian interference" is not just a few hackers breaking into emails—it’s a well-documented, multi-decade strategy of disinformation designed to weaken democratic institutions. The Kremlin has spent years building an extensive network of fake social media accounts, bot farms, and propaganda outlets to spread divisive narratives.

The Senate Intelligence Committee, the FBI, and cybersecurity experts have all confirmed that Russia’s influence campaigns exploit social and political fractures, using platforms like Facebook and Twitter to push misleading or outright false information. Reports from organizations like the RAND Corporation and Stanford Internet Observatory show how these tactics are designed to erode trust in democracy itself, making people more susceptible to authoritarian and extremist messaging.

This isn’t just speculation. It’s the same playbook Russia used to interfere in the 2016 election, as documented in the Mueller Report, and again in 2020, as assessed by U.S. intelligence agencies. The goal has always been to amplify distrust, push conspiracy theories, and create a populace that can no longer distinguish fact from fiction.

And now? We’re seeing the results. A country where misinformation spreads faster than the truth, where people take social media posts at face value instead of questioning their sources, and where a populist leader can ride that wave of disinformation straight into power.

Putin doesn’t need to fire a single shot—he’s watching Americans tear themselves apart over lies his operatives helped plant. And the worst part? Many people still refuse to acknowledge it's happening.

Putin has long stated that Russia is at war with the West—not through traditional military means, but through information warfare. Intelligence agencies, cybersecurity experts, and independent researchers have repeatedly warned that we are being targeted. Yet, many in the West refused to take it seriously.

Now, we’re losing the war—not on the battlefield, but in the minds of our own citizens, as propaganda and disinformation tear at the very fabric of democracy.

We need to be able to identify their tactics when we see them.

I've personally noticed that there's a certain kind of user who will always reply no matter what. I think it's part of their job to never concede so it looks like they've won when people on the other side move on with their lives.

I've noticed the same thing. Some users seem less interested in genuine discussion and more focused on exhausting the conversation until the other person gives up. It’s not about exchanging ideas—it's about persistence, repetition, and making it seem like they've "won" just because they got the last word.

I've encountered accounts that mostly follow this pattern:

I only started wondering if some of these accounts might be Russian bots last week, but your comment is making me think my suspicion wasn’t just alarmist. Now I’m genuinely curious—have others been running into the same thing?