this post was submitted on 22 Aug 2025

Fediverse

A community to talk about the Fediverse and all its related services using ActivityPub (Mastodon, Lemmy, KBin, etc.).

If you want help moderating your own community, head over to !moderators@lemmy.world.

Rules

- Posts must be on topic.

- Be respectful of others.

- Cite the sources used for graphs and other statistics.

- Follow the general Lemmy.world rules.

Learn more at these websites: Join The Fediverse Wiki, Fediverse.info, Wikipedia Page, The Federation Info (Stats), FediDB (Stats), Sub Rehab (Reddit Migration)

founded 2 years ago

Yes, I see this there. Most of my nginx config comes from the 'default' nginx config in the Lemmy repo from a few years ago. My understanding is somewhat superficial: I don't actually know where the variable '$proxy_add_x_forwarded_for' gets populated, for example, and I did not know that it contained the client's IP.

I need to do some reading 😁

https://nginx.org/en/docs/http/ngx_http_proxy_module.html

$proxy_add_x_forwarded_for is a built-in variable that either appends the value of the built-in $remote_addr variable to the existing X-Forwarded-For header, if the request has one, or, if there is no such header, is simply equal to $remote_addr. The former case would be when Nginx is behind another reverse proxy, and the latter case when Nginx is exposed directly to the client.
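To make the two cases concrete, here is a small Python model of that behaviour. It is not nginx code: proxy_add_x_forwarded_for and first_hop are hypothetical helpers, the IPs are documentation addresses, and it assumes the backend trusts the left-most address in the header (a common pattern, not something confirmed here for Lemmy).

```python
from typing import Optional


def proxy_add_x_forwarded_for(incoming_xff: Optional[str], remote_addr: str) -> str:
    """Model of nginx's $proxy_add_x_forwarded_for: the client's X-Forwarded-For
    value with $remote_addr appended, or just $remote_addr if there is none."""
    if incoming_xff:
        return f"{incoming_xff}, {remote_addr}"
    return remote_addr


def first_hop(xff: str) -> str:
    """Hypothetical backend behaviour: treat the left-most address as the client."""
    return xff.split(",")[0].strip()


# Nginx exposed directly to the client, so the TCP peer is the real client.
real_client = "203.0.113.7"

# A client that sends no X-Forwarded-For header.
honest = proxy_add_x_forwarded_for(None, real_client)

# A client may send any X-Forwarded-For value it likes; nginx passes it along.
spoofed = proxy_add_x_forwarded_for("198.51.100.99", real_client)

print(honest)              # 203.0.113.7
print(spoofed)             # 198.51.100.99, 203.0.113.7
print(first_hop(spoofed))  # 198.51.100.99 (not the real client)
```

Setting the header from $remote_addr instead always yields the TCP peer address, which is why it is the safer choice when nothing else sits in front of Nginx.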

Assuming this Nginx is exposed directly to the clients, maybe try changing the bottom section like this to use the $remote_addr value for the XFF header. The commented one is just to make rolling back easier. Nginx will need to be reloaded after making the change, naturally.

Thanks!

I was able to crash the instance for a few minutes, but I think I have a better idea of where the problem is. The $remote_addr variable seems to work just the same.

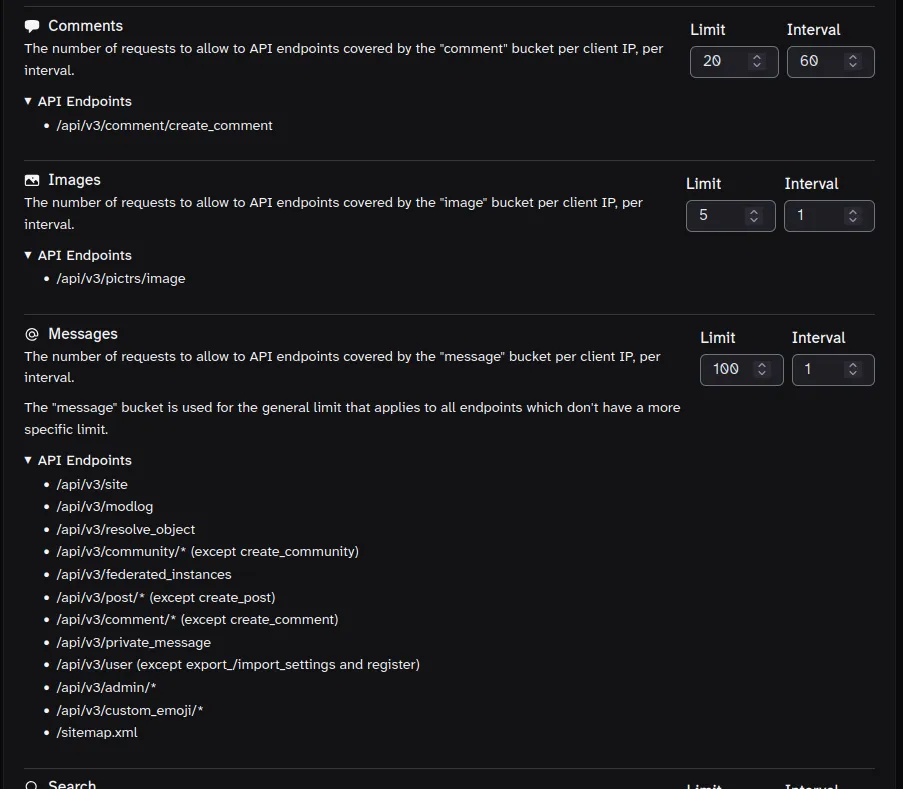

In the rate limit options there is a limit for 'Message'. Common sense tells me that this means 'direct message', but setting it to a low number is quite bad. While testing, I eventually set it to '1 per minute', and the instance became unresponsive until I modified the settings in the database manually. If I give this setting a high number, I can adjust the other settings without problems.

The "Message" bucket is kind of a general-purpose bucket that covers a lot of different endpoints. I had to ask the Lemmy devs what they were back when I was adding a config section for the rate limits in Tesseract.

These may be a little out of date, but I believe they're still largely correct:

So, ultimately, my problem was that I tried to set all of the limits to what I thought were "reasonable" values at the same time while misunderstanding what 'Message' meant, so my changes broke things without the reason being obvious to me. Looking at the source code, I can now see that 'Message' does refer to API calls rather than direct messages, and that there is no separate 'Direct Message' rate limit.
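Since 'Message' covers general API calls, a rough model helps explain why '1 per minute' made the instance unresponsive: one page load in the web UI can trigger several API calls, and they all draw from the same bucket. This Python sketch assumes a simple token-bucket limiter; TokenBucket, page_load and the call count of 10 are hypothetical, and Lemmy's real limiter (written in Rust) may differ in detail.

```python
class TokenBucket:
    """Simplified token bucket: at most `capacity` requests, refilled evenly
    over `refill_secs` seconds. A hypothetical model, not Lemmy's code."""

    def __init__(self, capacity: int, refill_secs: float) -> None:
        self.capacity = capacity
        self.rate = capacity / refill_secs  # tokens added per second
        self.tokens = float(capacity)       # bucket starts full
        self.last = 0.0                     # time of the previous check

    def allow(self, now: float) -> bool:
        # Refill for the elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def page_load(bucket: TokenBucket, now: float, api_calls: int) -> int:
    """Return how many of a page's API calls get through the limiter."""
    return sum(bucket.allow(now) for _ in range(api_calls))


# "1 per minute": the first call succeeds and the rest of the page is rejected.
strict = TokenBucket(capacity=1, refill_secs=60)
print(page_load(strict, now=0.0, api_calls=10))   # 1
print(page_load(strict, now=30.0, api_calls=10))  # 0 (only half a token refilled)

# A generous bucket lets the same page load through.
generous = TokenBucket(capacity=180, refill_secs=60)
print(page_load(generous, now=0.0, api_calls=10))  # 10
```

Under this model the admin settings page itself cannot load, which matches having to fix the value directly in the database.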

If I leave 'Message' high, I can set the other values to reasonable levels and everything works fine.

Thanks a lot for your help!! I am surprised and happy it actually worked out and I understand a little more 😁

Hi, I think I set the messages limit too low as well, and now no.lastname.nz is down. Any pointers on how to fix it with no frontend?

If you have DB access, the values are in the local_site_rate_limit table. You'll probably have to restart Lemmy's API container to pick up any changes if you edit the values in the DB. 100 per second is what I had in my configuration, but you may bump that up to 250 or more if your instance is larger.

Thanks:

UPDATE local_site_rate_limit SET message = 999, message_per_second = 999 WHERE local_site_id = 1;

Awesome! Win-win.

😁 👍