Probably.

BlueMonday1984

On this specifically, no, but Character.ai has gotten hit with more lawsuits - one for encouraging a kid to kill his parents, and one for grooming one kid and fucking up another.

Giving my off-the-cuff thoughts, these two suits are likely gonna further push the public to view AI as inherently harmful to kids, if not inherently harmful in general.

Found a couple QRTs cooking the guy which caught my attention:

https://twitter.com/denimneverdies/status/1872364569743786286

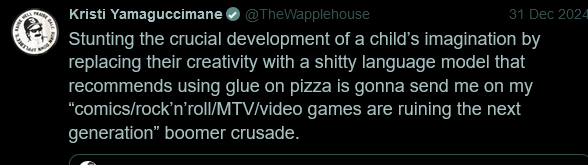

https://twitter.com/TheWapplehouse/status/1873915404529406462

It took me a good minute or so to realise this was satire. Nice find.

I mean, at least it’s not some stupid vaguery that armchair dullards will argue about in circles forever, over word meanings and minor technicalities that have nothing to do with AI and everything to do with their own malformed views of reality and human behavior.

They're called "programmers". They think it makes them sound smart.

Well-known YouTuber Markiplier also went off on Honey back in 2019, and needless to say he felt pretty vindicated:

In other news, Character.AI has ended up in the news again for allowing school shooter chatbots to flourish on its platform.

You want my off-the-cuff take, this is definitely gonna fuck c.ai's image even further, and could potentially leave them wide open to a lawsuit.

On a wider front, this is likely gonna give AI another black eye, and push us one step closer to the utter destruction of AI as a concept that I predicted a couple months ago.

Personally, my money's on them being thoroughly lost in the AI safety sauce - the idea of AI going rogue has been a staple in pop culture for quite a long time (TV Tropes lists a lot of examples), and the relentless anthropomorphisation of LLMs makes it pretty easy to frame whatever fuck-ups they make as a sentient AI pulling malicious shit.

And given man's long and storied history of manipulating and misleading his fellow man, I can see plenty of opportunity for fuck-ups baked directly into your average LLM's training data.