I played a bit with img2img and no prompt (or just a style, like anime). My first reaction was *Oh cool, I'm a girl!*, but it quickly turned into *Why does AI always turn me into a girl?*

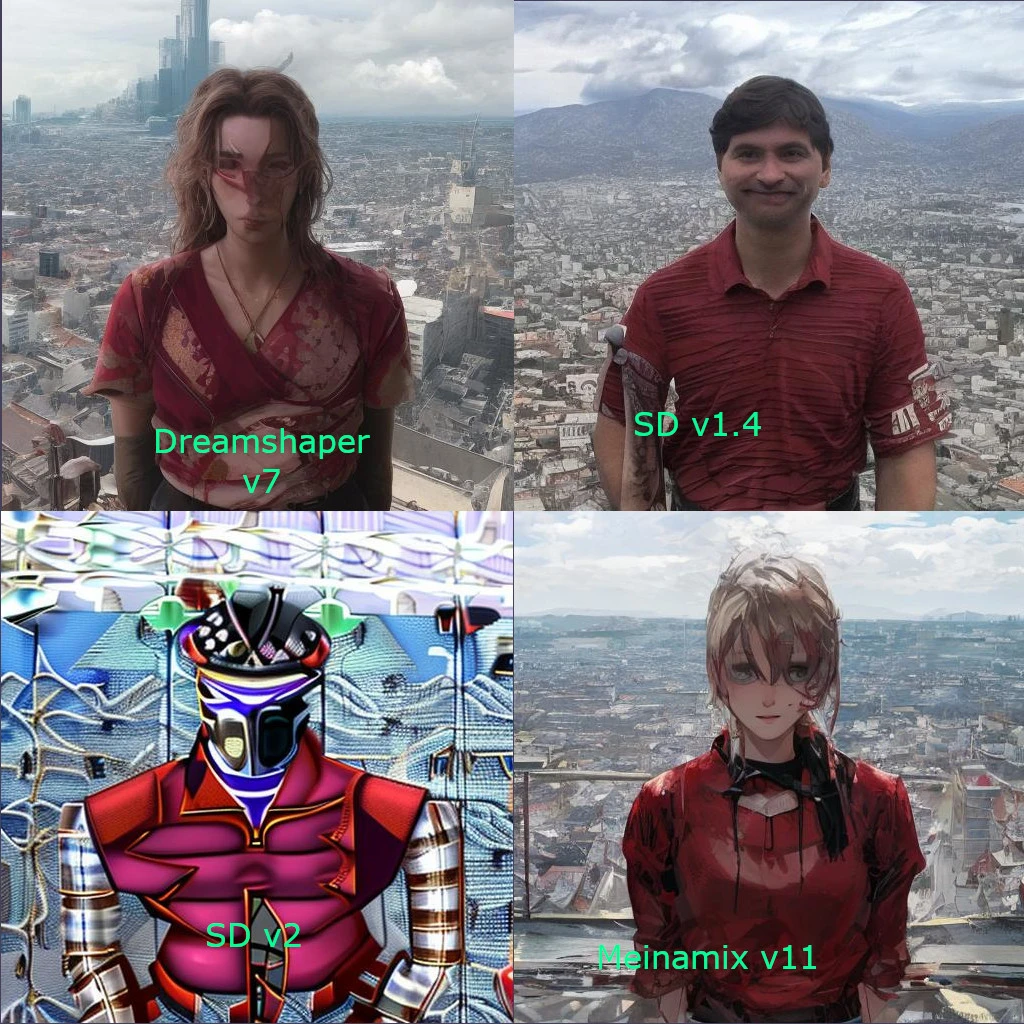

Here is a little experiment starting from the same image, a photo of a dude in a red T-shirt with a city panorama in the background (I won't publish the original photo), with zero other guidance (no prompt, no style). The two Stable Diffusion models gave a male character, while Dreamshaper and Meinamix gave a feminine character. I did a second generation with Meinamix and Dreamshaper and got a female character again.

Second experiment: same dude as above (OP), but with long hair and a pink doublet over a white shirt. Here I would understand the AI getting confused (even though I don't see why only girls could have long hair and wear pink clothes).

So the result is that Dreamshaper and Meinamix stay pretty close to my original clothing. Stable Diffusion 1.4 and Meinamix definitely chose a lady, Dreamshaper is more ambiguous but still on the feminine side of the gender spectrum, and Stable Diffusion 2 gives shit, but let's say the beard makes it male. So again, these models do pretty great at "drawing my clothing" but seem biased toward female-passing results.

While writing this post I did a few more generations: with Meinamix I got 2 guys out of 6 images (1 out of 6 with Dreamshaper), so here is one that is pretty close to the original photo.

Obviously, this is not a statistical study, and I know I could easily add a negative prompt. But it triggered my curiosity and was a fun experiment to do.
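To put a rough number on "not a statistical study", here is a small sketch that takes the counts above (2 of 6 for Meinamix, 1 of 6 for Dreamshaper) and computes a Wilson score interval for each. The function name `wilson_interval` and the choice of a 95% interval are my own assumptions, not part of the experiment. With only 6 images, the range for 2 of 6 comes out at roughly 10% to 70%, so the counts are compatible with a lot of different underlying rates.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 is roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


# Counts from the post: male-presenting results out of 6 generations each.
counts = {"Meinamix": (2, 6), "Dreamshaper": (1, 6)}

for model, (k, n) in counts.items():
    low, high = wilson_interval(k, n)
    print(f"{model}: {k}/{n} = {k / n:.0%}, plausible range {low:.0%} to {high:.0%}")
```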

You can see model biases by running a model with a blank positive prompt and "low quality" as the negative prompt. Run 20 gens and you'll see what the model likes to lean into.
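If you want to keep that probe reproducible, something like the sketch below might help. It only plans the 20 runs (blank prompt, "low quality" negative prompt, one seed per run) and tallies labels you assign by eye afterwards. It does not call any image backend: `ProbeJob`, `build_probe_jobs` and `tally` are hypothetical helper names, and the labels at the bottom are placeholders, not real results.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ProbeJob:
    """Settings for one generation; feed these to whatever UI or API you use."""
    prompt: str
    negative_prompt: str
    seed: int


def build_probe_jobs(prompt: str = "", negative_prompt: str = "low quality",
                     runs: int = 20, first_seed: int = 0) -> list[ProbeJob]:
    """Plan `runs` generations that differ only by seed."""
    return [ProbeJob(prompt, negative_prompt, first_seed + i) for i in range(runs)]


def tally(labels: list[str]) -> dict[str, float]:
    """Share of each hand-assigned label, most common first."""
    total = len(labels)
    return {label: n / total for label, n in Counter(labels).most_common()}


jobs = build_probe_jobs()
print(len(jobs), "jobs, first one:", jobs[0])

# Placeholder labels typed in by hand after looking at the images (not real data).
example_labels = ["portrait", "portrait", "landscape", "portrait"]
print(tally(example_labels))
```

Fixing the seeds means you can rerun the exact same 20 jobs on another checkpoint and compare the tallies side by side.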

You can also do gentle inference with a single token as the positive prompt. For example, if you are trying to find color biases, try something like Red_Outfit with the low quality negative prompt. However, once you start adding more tokens it mostly goes out the window, so it's not something to rely on so much as a way to get a sense of what might be more likely to occur.
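One way to read the single-token runs is to compare them against the blank-prompt baseline and look at how much each label's share moves. The sketch below assumes you have labelled both batches by hand. `label_shares` and `share_shift` are made-up helper names, and the two label lists are placeholders, not real outputs.

```python
from collections import Counter


def label_shares(labels: list[str]) -> dict[str, float]:
    """Fraction of the batch carrying each label."""
    total = len(labels)
    return {label: n / total for label, n in Counter(labels).items()}


def share_shift(baseline: list[str], with_token: list[str]) -> dict[str, float]:
    """Change in each label's share when the token is added (positive = more frequent)."""
    base = label_shares(baseline)
    tok = label_shares(with_token)
    return {label: tok.get(label, 0.0) - base.get(label, 0.0)
            for label in sorted(set(base) | set(tok))}


# Placeholder labels (dominant outfit color), not real results.
baseline_labels = ["red", "blue", "blue", "green"]   # blank prompt
token_labels = ["red", "red", "red", "blue"]         # prompt: Red_Outfit

for label, delta in share_shift(baseline_labels, token_labels).items():
    print(f"{label}: {delta:+.0%}")
```

A label that is already common in the baseline and barely moves with the token is probably just something the model leans into on its own.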

Very helpful for testing models you get from civitai or wherever else.