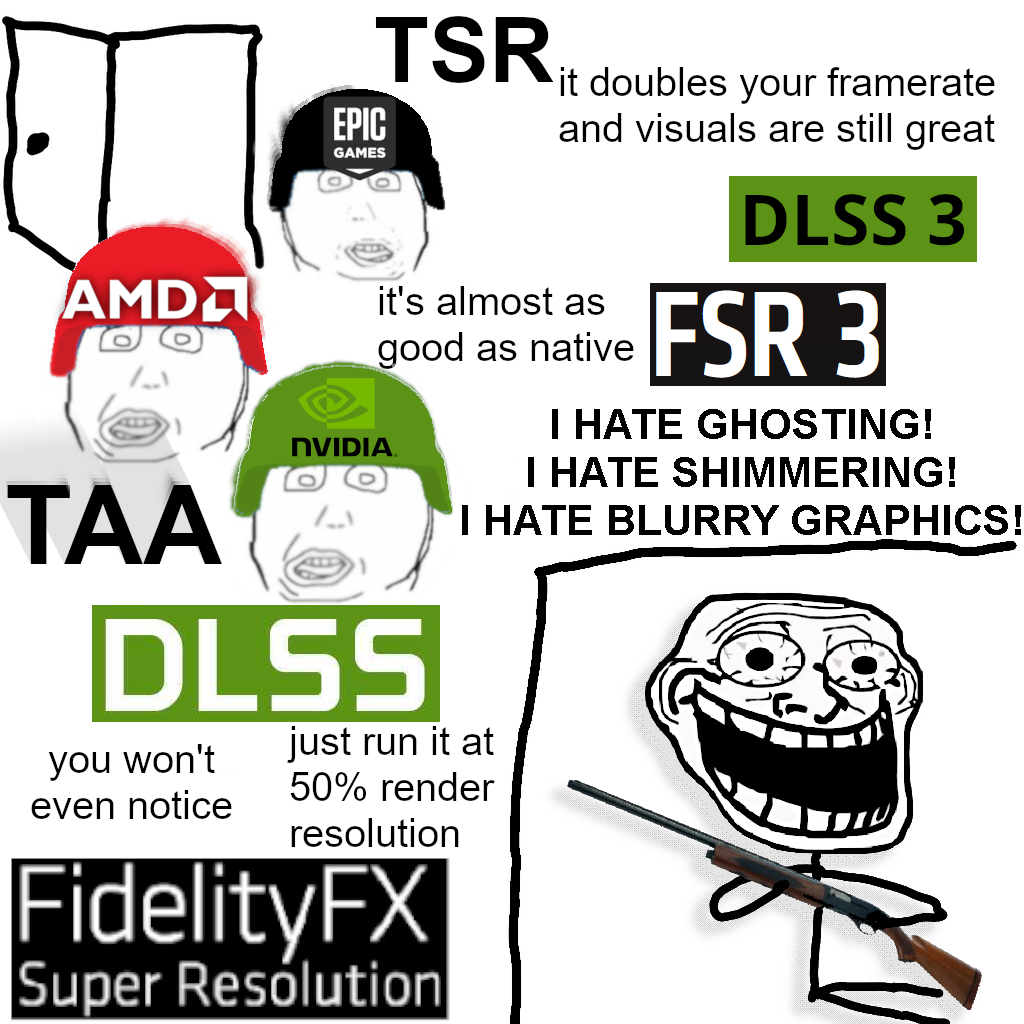

I'll be completely honest: that's probably the coldest take on recent tech I've seen, and it's being presented as a hot take.

Virtually everyone prefers native, almost aggressively so. That said, I think most discussions of upscaling miss an important nuance. In my testing, how much blurring and smearing I notice with upscaling/frame gen depends heavily on pixel density. On a really dense screen, upscaling becomes virtually undetectable, in my experience at least, even at 1080p.
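
For anyone who wants to put a number on "dense": pixel density is just the diagonal resolution in pixels divided by the diagonal size in inches. Here's a rough sketch of that arithmetic. The function name and the two screen sizes are only illustrative assumptions, not the displays I tested on.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Return pixel density (PPI) from a resolution and a diagonal size in inches."""
    if diagonal_in <= 0:
        raise ValueError("diagonal_in must be positive")
    # Diagonal length in pixels (Pythagoras), divided by diagonal length in inches.
    return math.hypot(width_px, height_px) / diagonal_in


if __name__ == "__main__":
    # Hypothetical examples: the same 1080p resolution on two screen sizes.
    for label, diagonal in [("24-inch monitor", 24.0), ("15.6-inch laptop", 15.6)]:
        ppi = pixels_per_inch(1920, 1080, diagonal)
        print(f"1080p on a {label}: {ppi:.1f} PPI")
```

Same resolution, very different density, which is the point: "1080p" on its own doesn't tell you how visible upscaling artifacts will be.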