For video encoding, you've really got two clear options: either an 8th-gen or newer consumer Intel chip with integrated graphics for Quick Sync support, or toss a GPU in there. You can also rely on raw CPU cycles for transcoding, but that's wildly energy-inefficient in comparison.

I've heard good things about how pretty much anything AM4 compares to X99-era Intel, on both raw performance and performance per watt, but I have no personal anecdata to share.

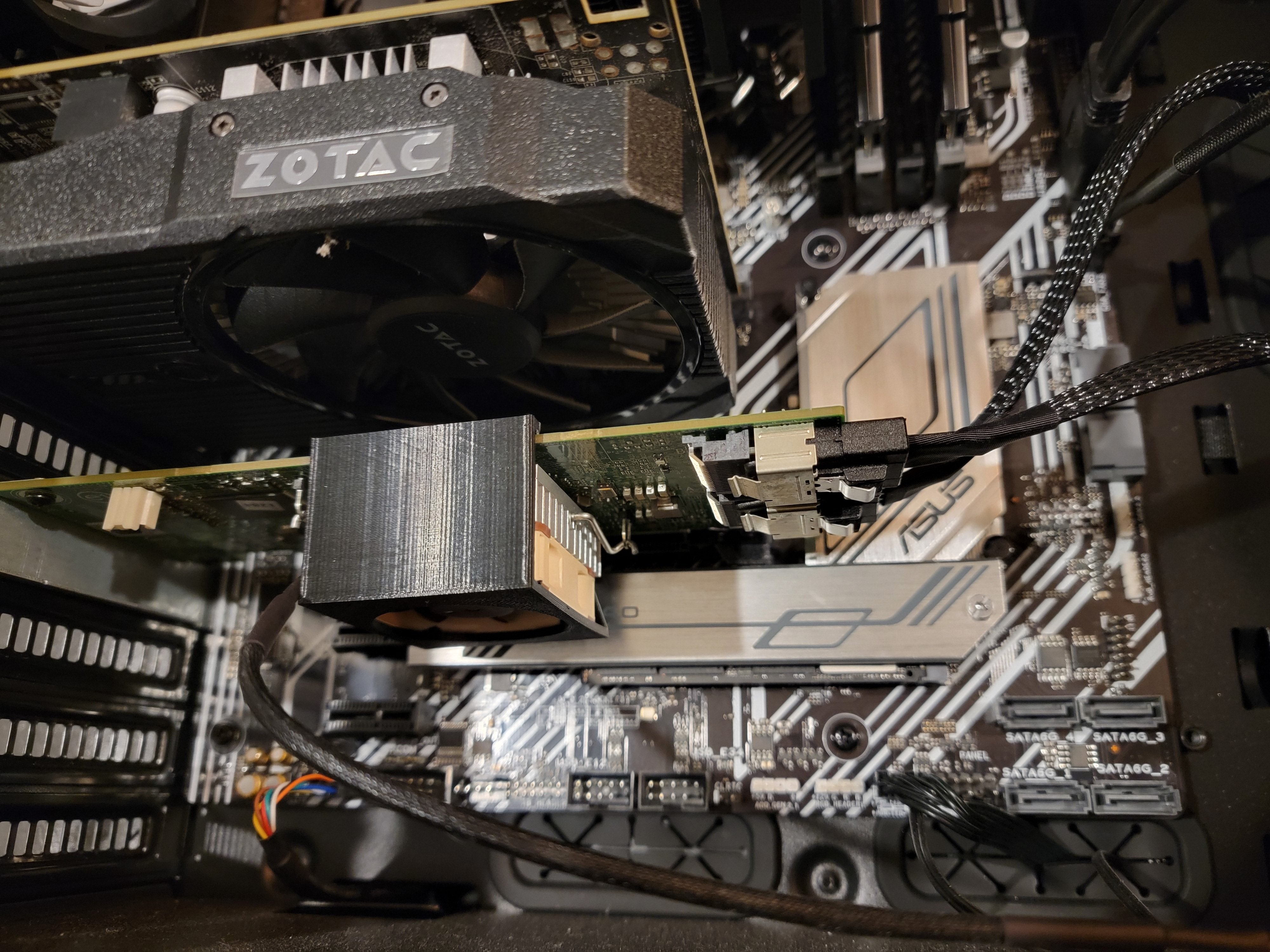

Personally, I'm eyeing up an upcoming gaming PC refresh as the opportunity to upgrade my primary server with the gaming PC's old components. But I'm also starting from literal e-waste I scrounged for free, so pretty much anything is a big upgrade.