196

Be sure to follow the rule before you head out.

Rule: You must post before you leave.

Other rules

Behavior rules:

- No bigotry (transphobia, racism, etc…)

- No genocide denial

- No support for authoritarian behaviour (incl. Tankies)

- No namecalling

- Accounts from lemmygrad.ml, threads.net, or hexbear.net are held to higher standards

- Other things seen as clearly bad

Posting rules:

- No AI generated content (DALL-E etc…)

- No advertisements

- No gore / violence

- Mutual aid posts are not allowed

NSFW: NSFW content is permitted but it must be tagged and have content warnings. Anything that doesn't adhere to this will be removed. Content warnings should be added like: [penis], [explicit description of sex]. Non-sexualized breasts of any gender are not considered inappropriate and therefore do not need to be blurred/tagged.

If you have any questions, feel free to contact us on our matrix channel or email.


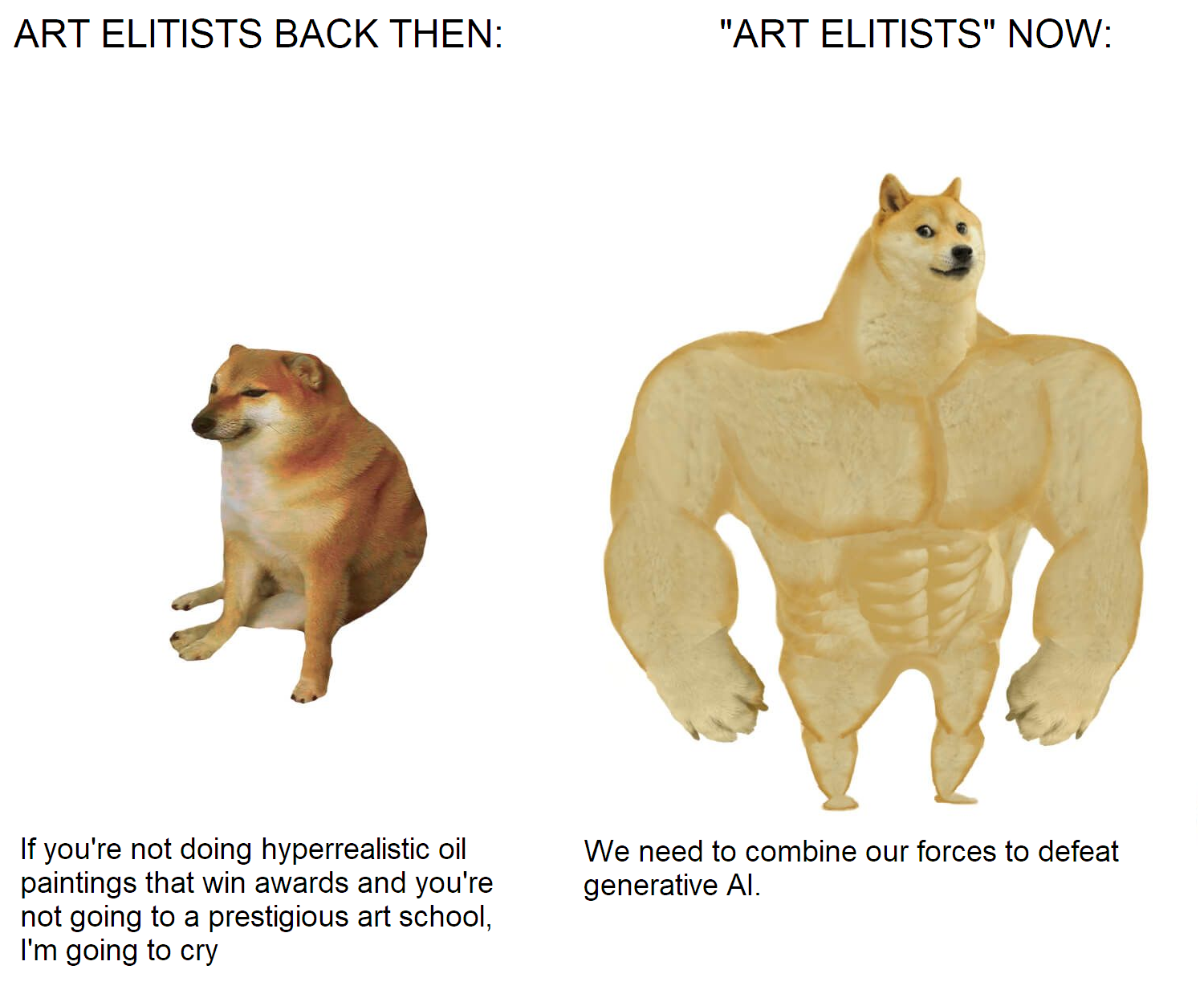

You can't "defeat" AI. It's not an organization or a group to fight against; it's technological progress.

The weavers' uprisings didn't stop the Industrial Revolution either. AI is a tool, and those who learn to handle and adapt to it the fastest will be the ones who fare the best.

The weavers fought the capitalist system, not industrial progress. The weaving machines were simply the easiest target, as they were expensive to build but highly profitable.

Same here: The goal is the overcoming of capitalism but until then we can annoy them by messing with their new toys.

People said the same about NFTs, and they suddenly disappeared...

With enough "bullying", we can force the genie back into its lamp. We just need to learn more from anti-GMO karens.

It's not even a matter of bullying: NFTs disappeared because they were fundamentally not viable, and there's a good chance that generative AI is also not viable.

Generating an output is extremely computationally expensive, which is a problem because you need several attempts to get an acceptable output (at least in terms of images). This service can't stay free or cheap forever, and once it starts being expensive, that's also a problem in itself since generative AI is most suited to generate large amounts of low-profit content.

For example, earlier this month, Deviantart highlighted a creator that they claimed to be one of their highest earners; they made $25k "in less than a year", which is not much for the highest earner, and they did it by posting over NINE THOUSAND images in that time. They were selling exclusives for less than $10.

The only way this makes sense is if it's really cheap to generate that many images. Even a moderate price, multiplied by 9000, multiplied by the number of attempts each time, would have destroyed their already middling profit.
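To put rough numbers on that, here's a quick back-of-the-envelope sketch. Only the $25k and the 9000 images come from the Deviantart example above; the per-attempt prices and the attempts-per-image counts are made-up assumptions, just to show how fast the multiplication eats the revenue.

```python
# Back-of-the-envelope check of the "price x 9000 x attempts" argument.
# REVENUE and IMAGES come from the Deviantart example in the thread;
# every price and attempt count below is a hypothetical assumption.

REVENUE = 25_000  # dollars earned "in less than a year"
IMAGES = 9_000    # images posted in that time (the thread says "over" 9000)


def generation_cost(images: int, attempts_per_image: int, price_per_attempt: float) -> float:
    """Total spend if every attempt is billed at the same price."""
    return images * attempts_per_image * price_per_attempt


def breakeven_price(revenue: float, images: int, attempts_per_image: int) -> float:
    """Price per attempt at which generation cost equals all of the revenue."""
    return revenue / (images * attempts_per_image)


if __name__ == "__main__":
    for attempts in (1, 5, 10):            # hypothetical attempts per kept image
        for price in (0.01, 0.10, 0.50):   # hypothetical dollars per attempt
            cost = generation_cost(IMAGES, attempts, price)
            share = cost / REVENUE
            print(f"{attempts:>2} attempts at ${price:.2f}: cost ${cost:,.0f} ({share:.0%} of revenue)")
        limit = breakeven_price(REVENUE, IMAGES, attempts)
        print(f"   break-even price with {attempts} attempts: ${limit:.2f} per attempt")
```

Under these assumed numbers, the revenue works out to under $3 per image, so anything beyond a few cents per attempt takes a big bite once you need several tries per image.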

Writers rise up

After 1945, they made sure no one gets rejected from art school anymore

The thing I hate most about this artist is that he can actually be funny sometimes, when he's not being a bigoted troll. Which is most of the time.

Like, how dare that asshole make me laugh at something he made?

Absolutely.

I didn't even notice, then in my panic I replied to my own comment instead of editing.

I'll leave the whole mess up now - mea culpa.

He can be really subtle in his messaging, too.

Like, reading this comic as a normal person, I see a ha-ha funny joke about the robot doing a hitler. Why does being rejected from art school make him do this? Uh... I don't know. It's just a reflection on an old story, don't think too much about it.

Viewing this comic through the lens of a nazi, however, doesn't it seem a little bit like a call to action? As if it's excusing the violence the ~~nazi~~ robot will engage in as a kind of justice for people's dismissal of AI art?

The only thing you have to do to reach that second conclusion is not believe that the nazi outcome is a bad one.

Anyway, I dunno. I'll never know what Stonetoss really meant, I just think it's interesting.

Edit: I don't normally like stonetoss. Can I have my comment back?

Nobody has been able to make a convincing argument in favour of generative AI. Sure, it's a tool for creating art. It abstracts the art making process away so that the barrier to entry is low enough that anyone can use it regardless of skill. A lot of people have used these arguments to argue for these tools, and some artists argue that because it takes no skill it is bad. I think that's beside the point. These models have been trained on data that is, in my opinion, both unethical and unlawful. They have not been able to conclusively demonstrate that the data was acquired and used in line with copyright law. That leads to the second, more powerful argument: they are using the labour of artists without any form of compensation, recognition, permission, or credit.

If, somehow, the tools could come up with their own styles and ideas then it should be perfectly fine to use them. But until that happens (it won't, nobody will see unintended changes in AI as anything other than mistakes because it has no demonstrable intent) use of a generative AI should be seen as plagiarism or copyright infringement.

How does copyright law cover this?

Copyright gives the copyright holder exclusive rights to modify the work, to use the work for commercial purposes, and attribution rights. The use of a work as training data constitutes using a work for commercial purposes, since the companies building these models are distributing and licensing them for profit. I think it would be a marginal argument to say that the output of these models constitutes copyright infringement on the basis of modification, but worth arguing nonetheless. Copyright does only protect a work up to a certain, indefinable amount of modification, but some of the outputs would certainly constitute infringement in any other situation. And these AI companies would probably find it nigh impossible to disclose specifically who the data came from.

> Copyright gives the copyright holder exclusive rights to modify the work, to use the work for commercial purposes, and attribution rights.

Copyright remains a system of abuse that empowers large companies to restrict artistic development more than it encourages artists. Besides which, you're failing to consider transformative work.

As it is, companies like Disney, Time Warner and Sony have so much control over IP that artists can't make significant profit without being controlled by those companies, and then only a few don't get screwed over.

There are a lot of valid criticisms about AI, but the notion that training them on work gated by IP law is wrong is not one of them... Unless you mean to also say that human beings cannot experience the work either.

Training AI pretty much falls under fair use, as far as I'm aware... https://www.eff.org/deeplinks/2023/04/how-we-think-about-copyright-and-ai-art-0

Rare EFF L.

Well, current law is not written with AI in mind, so what current law says about the legality of AI doesn't reflect its morality or how we should regulate it in the future

EFF does some good stuff elsewhere, but I don't buy this. You can't just break this problem down to small steps and then show for each step how this is fine when considered in isolation, while ignoring the overall effects. Simple example from a different area to make the case (came up with this in 2 minutes so it's not perfect, but you can craft this out better):

Step 1: Writing an exploit is not a problem, because it's necessary that e.g., security researchers can do that.

Step 2: Sending a database request is not a problem, because if we forbid it the whole internet will break

Step 3: Receiving freely available data from a database is not a problem, because otherwise the internet will break

Conclusion: We can't say that hacking into someone else's database is a problem.

What is especially telling about the "AI" "art" case: The major companies in the field are massively restrictive about copyright elsewhere, as long as it's the product of their own valuable time (or stuff they bought). But if it's someone else's work, apparently it's not so important to consider their take on copyright, because it's freely available online so "it's their own fault to upload it lol".

Another issue is the chilling effect: I for one have become more cautious sharing some of my work on the internet, specifically because I don't want it to be fed into "AI"s. I want to share it with other humans, but not with exploitative corporations. Do you know a way for me to achieve this goal (sharing with humans but not "AI") in today's internet? I don't see a solution currently. So the EFF's take on this prevents people (me) from freely sharing their stuff with everyone, which would otherwise be something they would encourage and I would like to do.

Unions would probably work, as long as you get some people the company doesn't want to replace in there too

Maybe also federal regulations, although that would probably just slow it down, because models are being made all around the world, including places like Russia and China that the US and EU don't have legal influence over

Also, it might be just me, but it feels like generative AI progress has really slowed. It almost feels like we're approaching the point where we've squeezed the most out of the hardware we have, and now we just have to wait for the hardware to get better

the true chad is on the left.

Hyper-realistic is the least interesting style imo, we have cameras for that

generative ai also ruins idents and maybe even diagrams.

I don't see a problem with Generative AI, because it's just going to be a great tool for companies to add graphics real fast to their products. I don't see it replacing regular art, since "AI art" is just a natural progression for endless content that you can already scroll on social media when you are bored.