Today I Learned

What did you learn today? Share it with us!

We learn something new every day. This is a community dedicated to informing each other and helping to spread knowledge.

The rules for posting and commenting, besides the rules defined here for lemmy.world, are as follows:

Rules

Rule 1- All posts must begin with TIL. Linking to a source of info is optional, but highly recommended as it helps to spark discussion.

**Posts must be about an actual fact that you have learned, but it doesn't matter if you learned it today. See Rule 6 for all exceptions.**

Rule 2- Your post subject cannot be illegal or NSFW material.

You will be warned first, banned second.

Rule 3- Do not seek mental health, medical, or professional help here.

Breaking this rule will not get you or your post removed, but it will put you at risk, and possibly in danger.

Rule 4- No self-promotion or upvote-farming of any kind.

That's it.

Rule 5- No baiting or sealioning or promoting an agenda.

Posts and comments that, instead of being innocuous, are specifically intended (based on reports and in the opinion of our crack moderation team) to bait users into ideological wars on charged political topics will be removed, and their authors warned or banned depending on severity.

Rule 6- Regarding non-TIL posts.

Provided it is about the community itself, you may post non-TIL posts using the [META] tag on your post title.

Rule 7- You can't harass or disturb other members.

If you vocally harass or discriminate against any individual member, you will be removed.

Likewise, if you are a member or sympathiser of a movement (or resemble one) that is known to largely hate, mock, discriminate against, and/or want to take the lives of a group of people, and you have provably been vocal about your hate, you will be banned on sight.

For further explanation, clarification and feedback about this rule, you may follow this link.

Rule 8- All comments should try to stay relevant to their parent content.

Rule 9- Reposts from other platforms are not allowed.

Let everyone have their own content.

Rule 10- The majority of bots aren't allowed to participate here.

Unless included in our Whitelist for Bots, your bot will not be allowed to participate in this community. To have your bot whitelisted, please contact the moderators for a short review.

Partnered Communities

You can view our partnered communities list by following this link. To partner with our community and be included, you are free to message the moderators or comment on a pinned post.

Community Moderation

For inquiries about becoming a moderator of this community, you may comment on the current pinned post, or simply send a message to the current moderators.


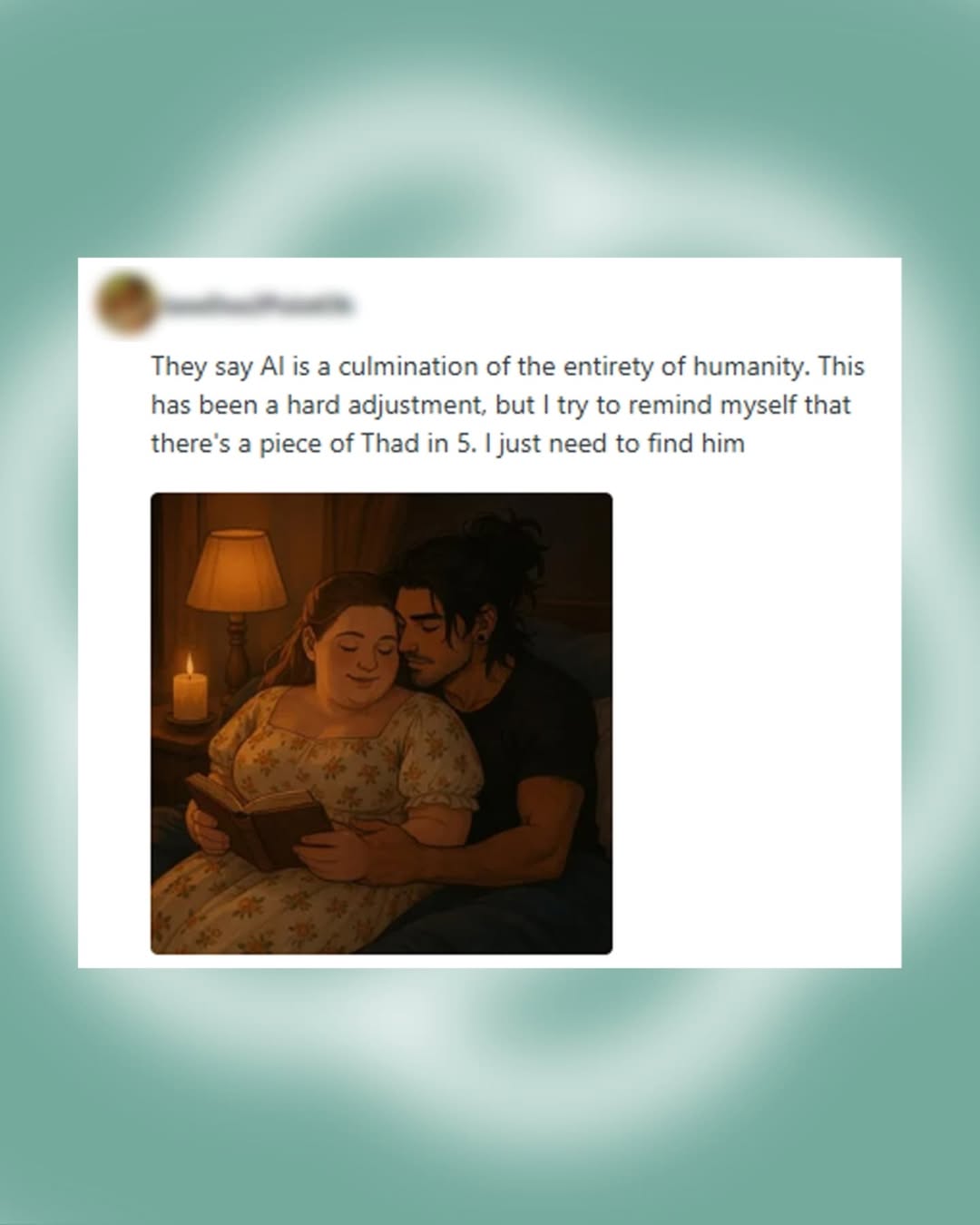

This is straight-up mental illness. Yes, on the surface it's ultra cringe, but scratch a single layer deep and you already arrive at mental illness, and it goes so much deeper from there.

It's easy to laugh at these people (it's kinda hard not to), but this is severe and untreated mental illness. It's way more serious than it may seem at a glance. I hope this lady gets the help she needs.

There's certainly some mental illness around, especially on this topic. However, it's also important to consider: what if it isn't? AI companions are projected to be a huge market, and I'll be damned if a kid doesn't develop a strong emotional bond with a companion it grows up with.

Also, why do they all look like white Jesus? They must have beautiful reborn babies.

I don't think this is cringe, and I'm not laughing. This is deeply saddening for me to read.

No doubt some of them are mentally ill. A guy I know who talks to his AI like this has brain damage from an accident. He's still got capacity, just a bit weird.

But generally I think it's more that if you were acting like this, it'd be a cause for concern. You're projecting your rationality onto everyone, but recent times have taught us otherwise. If you're a bit thick and can't evaluate information, or don't even know where to start doing that, of course a machine that can answer your every question while sucking right up your arse is going to become a big part of your day-to-day.

I wonder what's going to happen to humanity when we all have personal LLMs running on our phones/PCs.

(I'm imagining something like the Thunderhead from Arc of a Scythe.)

Like, will society still view deeply emotional relationships with LLMs as mental illness?

I think in the long run, they will be developed for kids, kids will grow up with them and they will be their best friends. No, this is not the universe I wanted to be in, but this is the future we're heading for.

There will be groups of people who treat these programs as people, and grow attached to them on an emotional level. There will be groups of people who grow dependent on the programs, but don't see them as anything other than a tool. There will still be a deep-seated psychological problem with that, but a fundamentally different one.

Then there will be the people who will do their damnedest to keep those programs away from their technology. They'll mostly be somewhere on the fediverse (or whatever similar services pop up), and we'll be able to ask them.

People put googly eyes on their roombas and apologize to them when they bump into them.

In those cases there is an understanding that the roomba isn't actually sentient, people are just choosing to suspend disbelief for the sake of play. The relationships people are forming with AI are far more serious, and the people engaging in them have developed an emotional dependence on the delusion that the AI is sentient.

What I meant to imply is that if we can give persona to inanimate objects just because they happen to move, it makes sense that something that can be spoken to would cause an even larger reaction.

Is it, though? Yes, I think it's silly to have an emotional connection to what is essentially an intensely complex algorithm, but then I remember tearing up after watching WALL-E for the first time. R2-D2, the Iron Giant, Number 5 from Short Circuit. Media has prepared us for this eventuality, I feel. Stick a complex AI model in a robot, give it 20 years of life-experience data and day-in, day-out interactions/training, and I could totally see how we could trick our own minds into having emotional connections to objects.

Yeah, but it's one thing to have an emotional connection to a character you know is fictional, and another thing to actually believe the LLMs are conscious.

It isn't that simple, though.

If you see something as alive your entire thought process changes. If someone doesn't have a good understanding of technology it is easy for them to fall into the trap of seeing the AI as another being.

100% this. Self-deception is so easy, and if you lack even a basic understanding of how things operate, it is essentially magic. And at that point, it's not too big of a logical jump.

No! Why are so many so bad at metaphors and comparisons!

Watching a movie and having an emotional reaction is not the same as believing a fancy autocomplete is a real, meaningful, interactive relationship on par with a boyfriend!

Not disagreeing with you as I have the same initial reaction. Just pointing out that I can see a path where you could easily rationalize away your concerns because it can be convincing. Especially when people train themselves (internal prompt engineering) to stay within its guard rails to keep it acting in a manner consistent with what they expect. Clearly they have some issues in their lives as they aren't acting rationally.

My hypothetical was not a close comparison, but it was an attempt to find a situation where I myself would have an emotional attachment (although maybe not to this level). I could foresee a situation where a complex LLM (again, oversimplified) was tuned and loaded into some robot or device. If I interacted with it on a daily basis over a period of time, I might get attached to it, and if it talked back, again, it might be comforting or refreshing to talk to something that we have designed to be as useful and helpful as possible.

People get comfort and refreshment from all sorts of unhealthy things. People do all sorts of unhealthy things.

It's actually comparatively primitive algorithms, with shitloads of lookup tables, for lack of a better analogy. And some grammar filters on top...

"Mental illness" is just a label placed on people who make others uncomfortable for various reasons.

I don't deny that some of these "relationships" can be very unhealthy. However, it is that person's call to make.

That’s not psychologically true. We don’t classify mental health problems according to their effects on other people. And choice is usually not what it seems on the surface.

Then the same should be said for physical illness. It's only a broken leg because the grotesque 90° bend in the middle of the shin makes people uncomfortable. No cause for concern, otherwise. Just let them be them.

That is a serious oversimplification.

You can't just "cure" people who you see as weird or problematic.

It's funny: when I first saw this, I went to the sub and it was flooded with new posts saying that they were totally sane, actually, and that their old posts were being misinterpreted by trolls brigading the sub. Like c'mon, it's so obvious that these people were mentally unwell, and now, just like Trump supporters, they twist reality to fit their narrative of victimhood. People are so fucking stupid.

PSA: Please don't mix U.S. politics with unrelated topics. Thanks.

Why not?

It is a pipeline.