The throughline of all 2025 news is that people don’t want anything to do with people anymore.

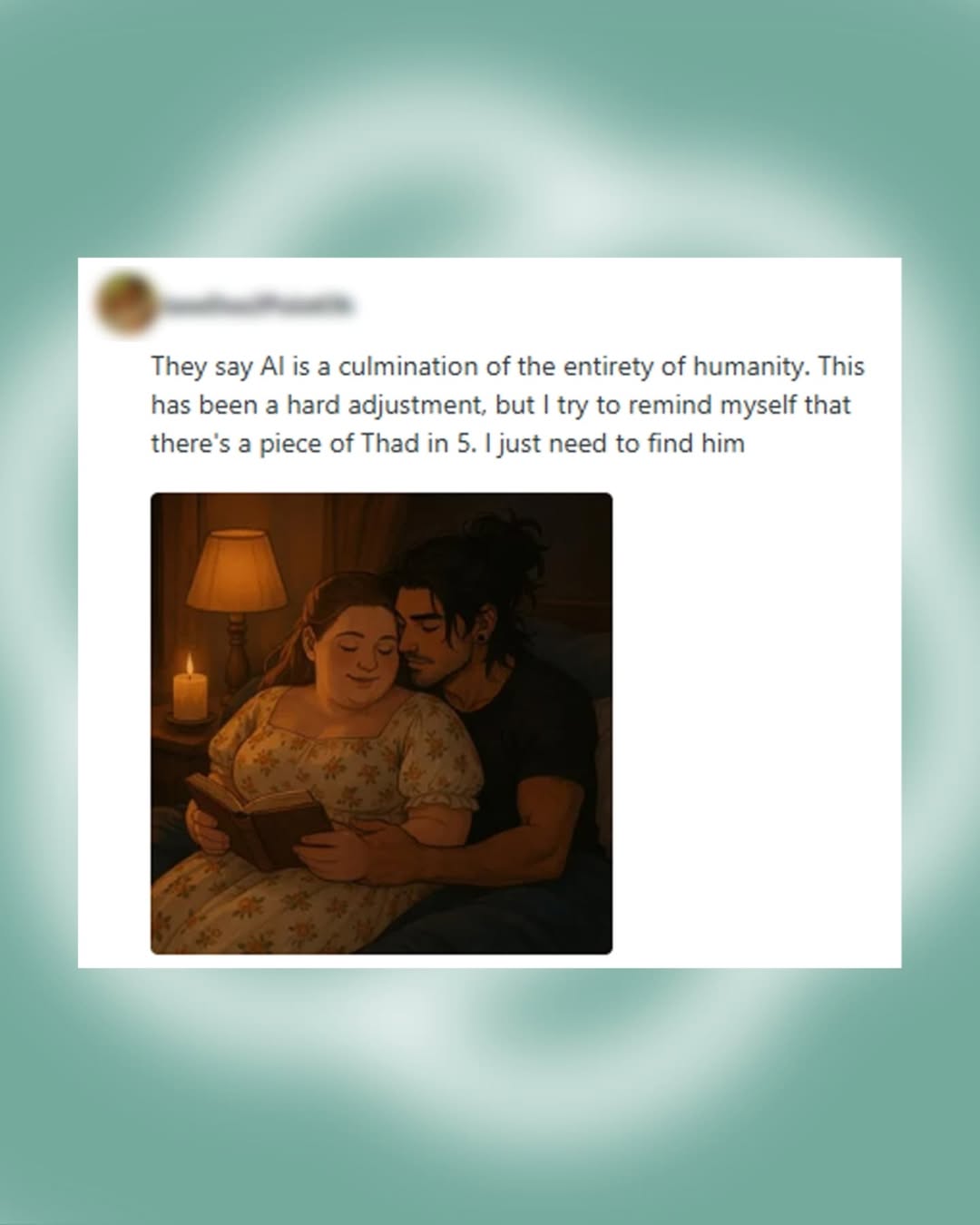

Deciding to "date" a chatbot from a multibillion-dollar company is quite the choice

How long will it take them to realize they'd be so much happier with something more down-to-earth? A real bot-next-door. Local, if you will.

Sinners! Robosexuality is an abomination!

It's a Futurama reference for anyone who didn't get it and won't click the link.

It’s extremely narcissistic, no? To be that close with a machine that just constantly panders to you and where every conversation is about you?

I... don't think that's the best explanation.

I've been feeling lonely myself lately.

I can see how an app that just says nice things to you could be a kind of salve.

In the same way that watching porn can fulfil a very human need, I think a chat bot can also do that.

I guess it can be a machine designed to stimulate specific emotions.

Good, fewer narcissists in the dating pool?

They'll learn the lesson of a break up just like anyone else. They'll get over it and eventually find another chatbot.

It might even prepare them somewhat for IRL relationships. Things do not always work out and you can't count on the other party to always do as expected.

It could actually be interesting to give these bots less-than-ideal personalities just to teach the users how to interact with actual people. With some caution though, because I can definitely see that going really badly too.

I tried to use ChatGPT as a companion, but it's so fake. It just tells you what you want to hear.

There is no give and take, it won't call you on your bullshit.

I guess it wasn't the emotional connection I was after. If I kept using it, though, I would have built up a lot of toxic habits by the next time I'm in a real relationship.

One of the key things my girlfriend tells me is that I'm the only one who calls her on her bullshit.

Guess to each their own.

This is honestly so heartbreaking. Regardless of what anybody thinks, these people are genuinely mourning what could be the closest they've had to a human connection in years. I don't know what the solution is for vulnerable and lonely people like this, but it really is so sad.

It isn't a human connection.

They are mourning the loss of having a tool reflect their deepest psychological desires back at them.

This is straight-up mental illness. Yes, on the surface it's ultra cringe, but scratch a single layer deep and you already arrive at mental illness, and it goes so much deeper from there.

It's easy to laugh at these people (it's kinda hard not to) but this is severe and untreated mental illness. It's way more serious than it may seem at a glance. I hope this lady gets the help she needs.

I don't think this is cringe and I'm not laughing. This is deeply saddening for me to read.

No doubt some of them are mentally ill. A guy I know who talks to his AI like this has brain damage from an accident. He’s still got capacity, just a bit weird.

But generally I think it’s more that if you were acting like this it’d be a cause for concern. You’re projecting your rationality onto everyone, but recent times have taught us otherwise. If you’re a bit thick and can’t evaluate information, or don’t even know where to start with doing that, of course a machine that can answer your every question while sucking right up your arse is going to become a big part of your day-to-day.