It isn't actually smart, or thinking. It's just statistics.

Fuck AI

"We did it, Patrick! We made a technological breakthrough!"

Right? AI didn't pass the Turing test; humans fucking failed it.

Not that the Turing test is meaningful or scientific at all...

just like the vast majority of people

Soon to be built upon sarcastics.

I'm not sure why that's a relevant distinction to make here. A statistical model is just as capable of designing (for instance) an atom bomb as a human mind is. If anything, I would much rather the machines destined to supplant me actually could think and have internal worlds of their own; that would be far less depressing.

It's relevant in the sense of its capability of actually becoming smarter. The way these models are set up at the moment puts a mathematical upper limit on what they can achieve. We don't know exactly where that limit is, but we know that each step taken will take significantly more effort and data than the last.

Without some kind of breakthrough w.r.t. how we model these things (so something other than LLMs), we're not going to see AI intelligence skyrocket.

> A statistical model is just as capable of designing (for instance) an atom bomb as a human mind is.

No. A statistical model is designed by a human mind. It doesn't design anything on its own.

If it got smarter it could tell you step by step how an AI would take control over the world, but wouldn't have the conscience to actually do it.

Humans are the dangerous part of the equation in this case.

A meat brain is also a statistical inference engine.

There's a kind of scam that some in the AI industry have foisted on others. The scam is "this is so good, it's going to destroy us. Therefore, we need regulation to prevent Roko's Basilisk or Skynet." LLMs have not gotten better to any significant degree. We have the illusion that they have because LLM companies keep adding shims that, for example, use Python libraries to correctly solve math problems or use an actual search engine on your behalf.

LLM development of new abilities has stagnated. Instead, the innovation seems to be in making models that don't require absurd power draws to run. (DeepSeek being a very notable, very recent example.)

I watched this video all the way through hoping they would turn things around but it's just the same fluff for a new audience.

The Checkmate Model Omega

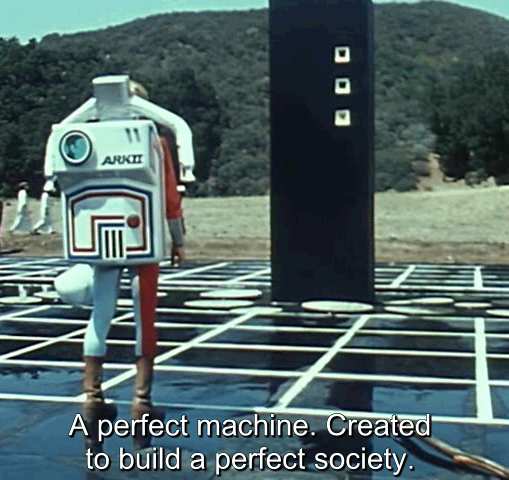

What's this from?

A TV show called Ark II

Ark II is an American live-action science fiction television series, aimed at children, that aired on CBS from September 11 to December 18, 1976.

Thank you

We all get AI robot panthers to ride around on?

You know what? I think I'm OK with that...

Rational Animations

Figures