In my case they're facing a 100% revenue reduction regardless of when (or whether) it's cracked.

I'm never going to buy Denuvo-infested malware, and developers and publishers who try to pull this shit go straight into the blacklist.

Reddit is running on a potato.

Lemmy is running on several distributed potatoes, with a much smaller user load per tuber (and many orders of magnitude fewer bots).

The other day we were going over some SQL query with a younger colleague and I went “wait, what was the function for the length of a string in SQL Server?”, so he typed the whole question into ChatGPT, which replied (extremely slowly) with some unrelated garbage.

I asked him to let me take the keyboard, typed “sql server string length” into Google, saw LEN in the excerpt from the first result, and went on to do what I'd wanted to do, while in another tab ChatGPT was still spewing nonsense.

LLMs are slower, several orders of magnitude less accurate, and harder to use than existing alternatives, but they're extremely good at convincing their users that they know what they're doing and what they're talking about.

That leads the people using them to blindly copy their useless, buggy code, wasting everyone's time and learning nothing. Even if that code worked and wasn't incomplete and full of bugs, it would be intended to solve a completely different problem, since users are incapable of properly asking for what they want, and LLMs would produce the wrong code most of the time even if asked properly.

Not that blindly copying from Stack Overflow is any better, of course, but Stack Overflow or Reddit answers come with comments and alternative answers that, if you read them, will go a long way towards telling you whether the code you're copying will work for your particular situation or not.

LLMs give you none of that context, and are fundamentally incapable of doing the reasoning (and learning) that you'd do given different commented answers.

They'll just very convincingly tell you that their code is right, correct, and adequate to your requirements, and leave it to you (or whoever has to deal with your pull requests) to find out without any hints why it's not.

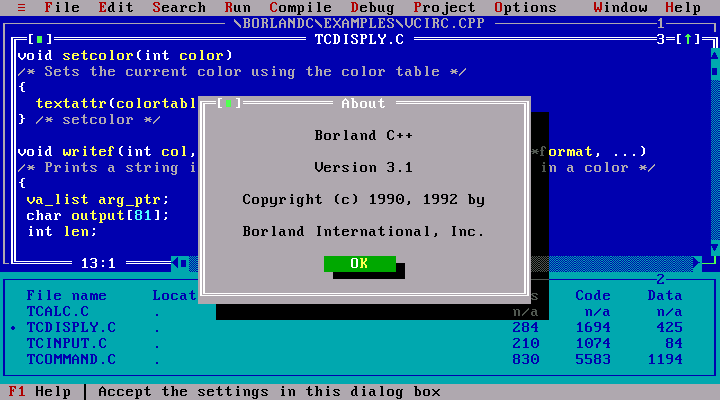

You might not like it, but Borland yellow on blue was peak IDE design.

Personally, I have downloaded Borland themes for all my IDEs. I don't use them, of course, because I'm not entirely insane and I value my eyesight too much, but I have downloaded them.

Because it'll look bad for NASA if people are stranded on the ISS (plus, I assume they have to foot the bill for any resulting extra resupply missions).

Also, if I'm not mistaken, NASA authorised the launch knowing that the craft was faulty and leaking and that the company was malignantly incompetent, so it's partly their fault, too, or at least they were necessary accomplices.

What is it with humans and eating, smoking, or drinking any kind of leaf, seed, or other vegetable that has evolved a deadly toxin to avoid being eaten..?

“You know what, this tastes a bit bland, let's add some insecticide to it to make it spicier!”

Asimov didn't design the three laws to make robots safe.

He designed them to make robots break in ways that'd make Powell and Donovan's lives miserable in particularly hilarious fashion (for the reader, not the victims).

(They weren't even designed for actual safety in-world; they were designed for the appearance of safety, to get people to buy robots despite the Frankenstein complex.)

The anthropologists got it wrong when they named our species Homo sapiens ('wise man'). In any case it's an arrogant and bigheaded thing to say, wisdom being one of our least evident features. In reality, we are Pan narrans, the storytelling chimpanzee.

— Sir Terry Pratchett, The Globe (The Science of Discworld, #2), 2002

LLMs are not AI, though. They're just fancy auto-complete. Just bigger Elizas, no closer to anything remotely resembling actual intelligence.

“But the plans were on display…”

“On display? I eventually had to go down to the cellar to find them.”

“That’s the display department.”

“With a flashlight.”

“Ah, well, the lights had probably gone.”

“So had the stairs.”

“But look, you found the notice, didn’t you?”

“Yes,” said Arthur, “yes I did. It was on display in the bottom of a locked filing cabinet stuck in a disused lavatory with a sign on the door saying ‘Beware of the Leopard.’”

— Douglas Adams, The Hitchhiker's Guide to the Galaxy

This guy doesn't even know how to breathe without doing something illegal, does he..?